Psychological Research

Methods of Data Collection

Regardless of the research method, data collection will be necessary. The method of data collection selected will primarily depend on the type of information the researcher needs for their study; however, other factors, such as time, resources, and even ethical considerations, can influence the selection of a data collection method. All of these factors must be considered when selecting a data collection method, as each method has unique strengths and weaknesses. We will discuss the uses and assessment of the most common data collection methods: observation, surveys, archival data, and tests.

Observation

The observational method involves observing and recording specific behaviors of participants. In general, observational studies have the strength of allowing researchers to observe people’s behavior firsthand. However, observations may require more time and manpower than other data collection methods, often resulting in smaller samples of participants. Researchers may spend a significant amount of time waiting to observe a behavior, or the behavior may never occur during the observation. It is important to remember that people tend to change their behavior when they know they are being watched (known as the Hawthorne effect).

Observations may be done in a naturalistic setting to reduce the likelihood of the Hawthorne effect. During naturalistic observations, the participants are in their natural environment and are usually unaware that they are being observed. For example, observing students participating in their class would be a naturalistic observation. The downside of a naturalistic setting is that the researcher doesn’t have control over the environment. Imagine that the researcher goes to the classroom to observe the students, and a substitute teacher is present. The change in instructor that day could impact student behavior and skew the data.

If controlling the environment is a concern, a laboratory setting may be a better choice. In the laboratory environment, the researcher can manage confounding factors or distractions that might impact the participants’ behavior. Of course, maintaining a laboratory carries expenses that naturalistic observation does not, which increases the cost of the study. And because participants in a laboratory usually know they are being observed, the Hawthorne effect may again impact behavior.

Surveys

Surveys are familiar to most people because they are so widely used. Surveys make it easier to reach participants because they can be conducted in person, over the phone, through the mail, or online. Researchers commonly use them to gather information on many variables in a relatively short period of time.

Most surveys involve asking a standard set of questions to a group of participants. In a highly structured survey, subjects are forced to choose from a response set such as “strongly disagree, disagree, undecided, agree, strongly agree”; or “0, 1-5, 6-10, etc.” One of the benefits of having forced-choice items is that each response is coded, allowing the results to be quickly entered and analyzed using statistical software. While this type of survey typically yields surface-level information on a wide variety of factors, it may not provide an in-depth understanding of human behavior.
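To illustrate how forced-choice responses can be coded for analysis, here is a minimal Python sketch. The numeric codes (1 through 5), the function name, and the six responses are all assumptions made up for this example; real studies define their own coding schemes.

```python
from collections import Counter
from statistics import mean

# Hypothetical coding scheme: each forced-choice label maps to a numeric code.
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "undecided": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses):
    """Convert response labels to numeric codes, ignoring case and extra spaces."""
    return [LIKERT_CODES[r.strip().lower()] for r in responses]


# Made-up answers from six participants to a single survey item.
raw = ["Agree", "strongly agree", "Undecided", "agree", "Disagree", "Agree"]
codes = code_responses(raw)

print(codes)           # one numeric code per participant
print(Counter(codes))  # how often each code was chosen
print(mean(codes))     # average response on the 1-5 scale
```

Because every answer falls into one of a few predefined categories, summaries such as counts and averages take only a line or two, which is the practical advantage of forced-choice items described above.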

Of course, surveys can be designed in various ways. Some surveys ask open-ended questions, which let each participant devise their own response and therefore produce a wide variety of answers. This variety may provide deeper insight into the subject than forced-choice questions, but it makes comparing answers challenging. Imagine a survey question that asked participants to report how they are feeling today. If there were 100 participants, there could be 100 different answers, which would take more time and effort to code and analyze.

Surveys are useful for examining stated values, attitudes, and opinions, as well as for reporting on practices. However, they are based on self-reports, and this can limit accuracy. For various reasons, people may not provide honest or complete answers. Participants may be concerned about projecting a particular image through their responses, be uncomfortable answering the questions, inaccurately assess their behavior, or lack awareness of the behavior being assessed. So, while surveys can provide a lot of information for many participants quickly and easily, self-reporting may not be as accurate as other methods.

Content Analysis of Archival Data

Content analysis involves examining media such as historical texts, images, commercials, lyrics, or other materials to identify patterns or themes in culture. Examples include the classic history of childhood by Aries (1962), titled “Centuries of Childhood,” and analyses of television commercials for sexual or violent content, or for ageism. Passages in texts or television programs can also be randomly selected for analysis. One advantage of analyzing work such as this is that the researcher does not have to incur the time and expense of finding respondents; however, the researcher cannot know how accurately the media reflects the actions and sentiments of the population.

Secondary content analysis, also known as archival research, involves analyzing information that has already been collected or examining documents or media to uncover attitudes, practices, or preferences. Several data sets are available for those who wish to conduct this type of research. The researcher conducting secondary analysis does not have to recruit subjects, but does need to be aware of the quality of the information collected in the original study. And unfortunately, the researcher is limited to the questions asked and data collected originally.

Tests

Many variables studied by psychologists—perhaps the majority—are not so straightforward or simple to measure. These kinds of variables are called constructs and include personality traits, emotional states, attitudes, and abilities. Psychological constructs cannot be observed directly. One reason is that they often represent tendencies to think, feel, or act in certain ways. For example, to say that a particular college student is highly extroverted does not necessarily mean that she is behaving in an extroverted way right now. Another reason psychological constructs cannot be observed directly is that they often involve internal processes, like thoughts or feelings. For these psychological constructs, we require an alternative means of collecting data. Tests will serve this purpose.

A good test will help researchers assess a particular psychological construct. What is a good test? Researchers want a test that is standardized, reliable, and valid. A standardized test is one that is administered, scored, and analyzed in the same way for each participant. This minimizes differences in test scores due to confounding factors, such as variability in the testing environment or scoring process, and ensures that scores are comparable. Reliability refers to the consistency of a measure. Researchers consider three types of consistency: over time (test-retest reliability), across items (internal consistency), and across different researchers (interrater reliability). Validity is the extent to which the scores from a measure represent the variable they are intended to measure. When a measure exhibits good test-retest reliability and internal consistency, researchers can be more confident that the scores accurately represent what they are intended to measure, although reliability alone does not guarantee validity.
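Two of these forms of reliability are commonly quantified with simple statistics. The Python sketch below is one possible illustration, not a prescribed procedure: it assumes test-retest reliability is estimated with a Pearson correlation between two administrations and internal consistency with Cronbach's alpha. The function names and all scores are invented for this example.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of participant scores per item."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per participant
    sum_item_var = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - sum_item_var / variance(totals))


# Invented data: five participants take the same test twice, weeks apart.
time1 = [10, 14, 18, 22, 25]
time2 = [11, 13, 19, 21, 26]
retest_r = pearson_r(time1, time2)

# Invented data: the same five participants answer three items of one scale.
items = [
    [2, 3, 4, 4, 5],
    [1, 3, 3, 5, 5],
    [2, 2, 4, 4, 5],
]
alpha = cronbach_alpha(items)

print(f"test-retest r = {retest_r:.2f}")
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 indicate more consistent scores. Which threshold counts as acceptable depends on the field and the purpose of the test, so none is built into the sketch.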

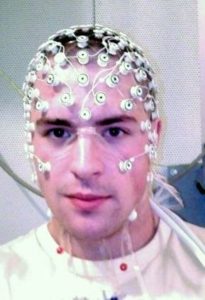

There are various types of tests used in psychological research. Self-report measures are those in which participants report their own thoughts, feelings, and actions, such as the Rosenberg Self-Esteem Scale or the Big Five Personality Test. Some tests measure performance, ability, aptitude, or skill, like the Stanford-Binet Intelligence Scale or the SAT. Some tests measure physiological states, including electrical activity or blood flow in the brain.

Video 1. Methods of Data Collection explains various means for gathering data for quantitative and qualitative research.

Reliability and Validity

Everyday Connection: How Valid Is the SAT?

Standardized tests like the SAT are supposed to measure an individual’s aptitude for a college education, but how reliable and valid are such tests? Research conducted by the College Board suggests that scores on the SAT have high predictive validity for first-year college students’ GPA (Kobrin, Patterson, Shaw, Mattern, & Barbuti, 2008). In this context, predictive validity refers to the test’s ability to effectively predict the GPA of college freshmen. Given that many institutions of higher education require the SAT for admission, this high degree of predictive validity might be comforting.
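Predictive validity is often summarized by how strongly test scores relate to the later outcome. The Python sketch below is a rough illustration under stated assumptions: it fits a least-squares line and reports the squared correlation. The five SAT and GPA pairs are invented for this example and are not College Board data; the function name is likewise hypothetical.

```python
from statistics import mean


def fit_line(x, y):
    """Least-squares slope, intercept, and Pearson r for predicting y from x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / (sxx * syy) ** 0.5
    return slope, intercept, r


# Invented scores for five hypothetical students.
sat = [1000, 1100, 1200, 1300, 1400]
gpa = [2.6, 3.0, 2.9, 3.4, 3.6]

slope, intercept, r = fit_line(sat, gpa)

print(f"predicted GPA = {intercept:.2f} + {slope:.4f} * SAT")
print(f"r = {r:.2f}, r squared = {r ** 2:.2f}")
```

The squared correlation is the share of variation in GPA that the line accounts for in this sample. A real validity study would use far larger samples and would consider other predictors, such as high school grades.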

However, the emphasis placed on SAT scores in college admissions has generated controversy on several fronts. For one, some researchers assert that the SAT is a biased test that places minority students at a disadvantage and unfairly reduces their likelihood of being admitted into a college (Santelices & Wilson, 2010). Additionally, some research has suggested that the SAT’s ability to predict the GPA of first-year college students is overstated. In fact, it has been suggested that the SAT’s predictive validity may be overestimated by as much as 150% (Rothstein, 2004). Many institutions of higher education are beginning to reconsider the significance of SAT scores in admission decisions (Rimer, 2008).

In 2014, College Board President David Coleman acknowledged the existence of these problems, recognizing that high school grades more accurately predict college success than SAT scores. To address these concerns, he called for significant changes to the SAT exam (Lewin, 2014).